About AI and context

By Admin


Hello, folks!

We continue our series of articles about AI (Artificial Intelligence) and how to use it. Today's article will be more theoretical. We will try to figure out what models are, their types, how to use them, and what features they have.

I hope you find it interesting, so let's go!

As usual (just like back in school), let's start by making sense of the concept of an AI model.

Artificial Intelligence — It's Not Magic, It's Math on Steroids

When you hear "AI model," it might seem like we're talking about something like a robot that thinks like a human. But in reality, it's much simpler (and more complex at the same time). An AI model is a mathematical construct that has been trained on data so that it can later predict, classify, or even generate new text, images, or code.

For example, if you use a translator, get recommendations on Spotify, or filter spam in your mail, there's one or more of these models at work. But it's important to understand: a model is not the algorithm itself, but the result of its application to data.

The process usually looks like this: you take an algorithm (e.g., gradient boosting, neural network, or SVM), "feed" it data, and get a model. This model can then independently make decisions, such as determining whether there's a cat in a photo or if it's just a fluffy blanket.

In this article, we'll dig deeper: how models differ from one another, why foundation models (like GPT, Claude, etc.) are needed, and why you can't make even a simple chatbot today without powerful GPUs.

How an AI Model "Remembers" Information: Vectors, Spaces, and Mathematical Magic

Okay, we already know that a model is the result of training on data. But a logical question arises: how does it store all this? Does it keep it somewhere like Google Docs?

No. An AI model remembers nothing in a "human-like" way. It doesn't know that a "cat" meows or that "pizza" is tasty. Instead, it represents the world through vectors: mathematical objects consisting of ordered lists of numbers. Roughly speaking, each word, image, request, or even concept is converted into a numeric code, a set of values in a space with hundreds or thousands of dimensions.

🧠 Example:

"cat" = [0.12, -0.98, 3.45, …]

When a model "thinks" about the word "cat" (usually it creates vectors for entire expressions), it doesn't work with text but with its vector representation. The same goes for the words "dog," "fluffy," "purrs," etc.

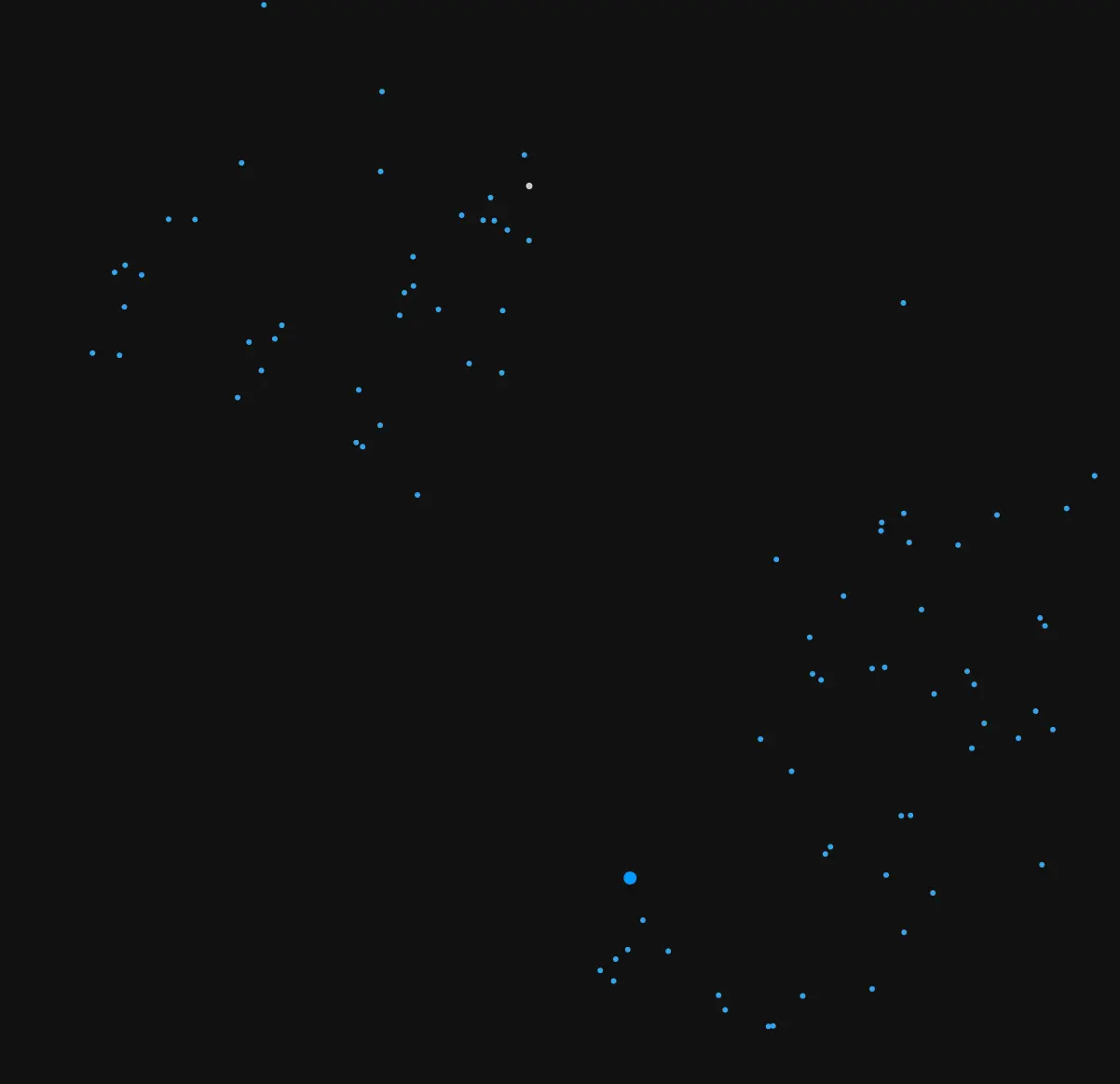

(Vector visualization. Top left - vectors of texts about prompting. Bottom right - vectors of texts about the Belarusian language.)

Interestingly, vectors close in this multidimensional space represent concepts that are close in meaning. For example, if the vector "cat" is near "dog," it means the model has learned to see something common between them (animals, domestic, fluffy).

How Are Vectors Compared?

To compare vectors, the model (or the system around it) measures the cosine similarity or the Euclidean distance between them. Simply put, it calculates how "parallel" or how "close" the vectors are to each other. The smaller the distance, or the larger the cosine similarity, the stronger the connection between the concepts.
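Both measures fit in a few lines of code. The sketch below is only an illustration: the three-dimensional vectors are made-up values (real embeddings have hundreds or thousands of dimensions), and the function names are our own.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between two points: 0.0 means identical."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Toy "embeddings" with invented values, for illustration only.
cat = [0.9, 0.8, 0.1]
dog = [0.8, 0.9, 0.2]
pizza = [0.1, 0.2, 0.9]

print("cat vs dog:  ", cosine_similarity(cat, dog), euclidean_distance(cat, dog))
print("cat vs pizza:", cosine_similarity(cat, pizza), euclidean_distance(cat, pizza))
```

With these toy values, "cat" and "dog" get a higher cosine similarity and a smaller distance than "cat" and "pizza," which is exactly the "close in meaning" effect described above.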

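For example, in the embedding spaces used by large language models (LLMs) like GPT, relationships between concepts show up as vector arithmetic:

- "Minsk" - "Belarus" ≈ "Paris" - "France" (the "capital of" offset is roughly the same for both pairs)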

- "Book" + "read" - "paper" ≈ "electronic"
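Analogies of this kind are usually checked with vector differences: the offset from a country to its capital should be roughly the same for every pair. Here is a minimal sketch with hand-made vectors whose three dimensions we have arbitrarily labeled "Belarus-ness," "France-ness," and "capital-ness." Real embeddings are learned, not hand-written, and the match is only approximate.

```python
def add(a, b):
    return [x + y for x, y in zip(a, b)]

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

# Hand-made toy vectors: [Belarus-ness, France-ness, capital-ness].
vocabulary = {
    "Belarus": [1, 0, 0],
    "Minsk":   [1, 0, 1],
    "France":  [0, 1, 0],
    "Paris":   [0, 1, 1],
}

# The "capital of" offset is the same for both pairs.
print(sub(vocabulary["Minsk"], vocabulary["Belarus"]))
print(sub(vocabulary["Paris"], vocabulary["France"]))

# "Paris" - "France" + "Belarus" should land next to "Minsk".
target = add(sub(vocabulary["Paris"], vocabulary["France"]), vocabulary["Belarus"])
nearest = min(vocabulary, key=lambda word: squared_distance(vocabulary[word], target))
print(nearest)
```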

And Why Is This Needed?

Everything, from understanding user questions to generating answers, happens through manipulating these vectors. As a simplified picture, if you write in chat "suggest a movie like Inception," the system locates the vector for "Inception," finds its neighbors in the space (e.g., "Interstellar," "Tenet"), and generates a recommendation based on them.
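The "find the neighbors" step can be sketched as a small nearest-neighbor search. This is a deliberately simplified picture: the movie vectors are invented, their three dimensions (sci-fi, mind-bending, romance) are our own assumption, and a real system would use learned embeddings and an optimized index.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Invented vectors: [sci-fi, mind-bending, romance].
movies = {
    "Inception":    [0.9, 0.9, 0.1],
    "Interstellar": [0.9, 0.7, 0.2],
    "Tenet":        [0.8, 0.9, 0.0],
    "The Notebook": [0.0, 0.1, 0.9],
}

def nearest_neighbors(title, k=2):
    """Return the k titles whose vectors are most similar to the given one."""
    query = movies[title]
    others = [name for name in movies if name != title]
    ranked = sorted(others, key=lambda name: cosine_similarity(query, movies[name]), reverse=True)
    return ranked[:k]

print(nearest_neighbors("Inception"))
```

With these toy values, the two science-fiction titles rank above the romance, so they are the ones a recommendation would be built from.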

Not All AI Models Are the Same: How They Are Divided

The variety of models is impressive. You can find models that analyze maps or detect diseases from images.

Let's try to classify AI models.

First, models can be divided by the type of data they work with:

🧠 Language Models (LLM)

Work with text: understand, continue, analyze, generate. Modern examples:

- Qwen 3 — supports both dense and Mixture-of-Experts architectures.

- Gemma 3 — compact, efficient, works even on 1 GPU.

- DeepSeek-R1 — focuses on reasoning and logic.

- LLaMA 3.3 / 4 — new open-source LLM.

👁 Visual Models (Computer Vision / Multimodal Vision)

Work with images: recognize, analyze, generate.

- LLaMA 4 Vision — understands images and can answer questions based on visual context.

- Gemma Vision — scalable visual model from Google.

- DALL-E - generates images from text descriptions.

🎤 Audio and Speech Models

For speech recognition, voice generation, emotions, etc. (Currently, there are few open-source competitors to Whisper or VALL-E, but they are expected.)

🔀 Multimodal

Capable of processing multiple types of data: text + image, text + audio, etc.

For example, LLaMA 4 — combines a language model with the ability to analyze images and supports agents (more on them in future articles).

🧰 Models Can Also Be Divided by Functionality:

- Task-specific models: finely tuned for a single task (e.g., SQL generation, automatic medical analysis).

- General-purpose models: perform multiple tasks without specific adaptation. These include all modern flagship models: Qwen 3, LLaMA 3.3, Gemma 3, DeepSeek-R1.

- Tool-augmented models: can use tools like calculators, search, databases, even other AIs. Example: GPT-4 Turbo with tools, LLaMA 4 Agents, DeepSeek Agent (based on R1).

🏋️ Models Can Also Be Divided by Size, Based on the Number of Parameters They Have (B = Billions):

- Tiny / Small (0.6B – 4B parameters) — works on local devices.

- Medium (7B – 14B) — requires GPU, works stably.

- Large (30B – 70B) — for data centers or enthusiasts with clusters.

- Ultra-large (100B – 700B+) — requires special equipment.
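To get a feel for why these size classes map to such different hardware, here is a rough back-of-the-envelope estimate. It assumes the weights dominate memory and that each parameter takes 2 bytes at 16-bit precision or 0.5 bytes with 4-bit quantization. Activations, the context cache, and framework overhead are ignored, so real requirements are higher. The function name and the sample sizes are our own choices.

```python
def weights_size_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    # billions of parameters * bytes per parameter = billions of bytes = GB
    return params_billion * bytes_per_param

for name, size_b in [("Small", 4), ("Medium", 14), ("Large", 70), ("Ultra-large", 700)]:
    fp16 = weights_size_gb(size_b, 2.0)  # 16-bit weights
    int4 = weights_size_gb(size_b, 0.5)  # 4-bit quantized weights
    print(f"{name:<12} {size_b:>4}B  ~{fp16:>6.0f} GB (16-bit)  ~{int4:>5.0f} GB (4-bit)")
```

Under these assumptions a 70B model needs about 140 GB for 16-bit weights alone, which is more than a single consumer GPU offers.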

Let's Discuss Features of Models You Might Not Know.

🧠 A Model Is Not Human. It Remembers Nothing

One might think that if a model answers your questions taking into account previous ones, it "remembers" the conversation. But that's an illusion. In reality, a model is a mathematical function that has no memory in the human sense.

Context Is Where All Memory "Lives"

Every time you send a request to a model, a so-called context is transmitted with it—the text of previous conversations, documents, instructions. It's like giving a person a cheat sheet before asking something. And if the next request doesn't contain the previous text, the model "forgets" everything.

📌 The model doesn't store any information after responding. Everything it "knows" is what you provided at the current moment.
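The idea can be shown with a toy stand-in for a model. `fake_model` below is a hypothetical pure function, not a real LLM API: it can answer the question only if the needed fact is present in the text it receives on that call.

```python
def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call: the output depends only on the prompt text."""
    if "What is my name?" in prompt:
        if "My name is Ada" in prompt:
            return "Your name is Ada."
        return "I don't know your name."
    return "Nice to meet you!"

history = []  # kept by the chat application, not by the model

def chat(user_message: str) -> str:
    history.append(f"User: {user_message}")
    prompt = "\n".join(history)  # the whole conversation is re-sent every time
    reply = fake_model(prompt)
    history.append(f"Assistant: {reply}")
    return reply

print(chat("My name is Ada."))
print(chat("What is my name?"))               # works: the history is in the prompt
print(fake_model("User: What is my name?"))   # same question without the history
```

The "memory" here is the `history` list that the application maintains and re-sends. Call the function without it and the fact is gone.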

Why Is This Important?

Because it means the model itself cannot remember users, conversations, or events. The only thing the model retains is what it learned from its data during training or fine-tuning. If it seems like it "remembers," that's not the model's doing but the work of the system around it, which:

- stores context,

- dynamically loads it,

- or uses vector databases or other tools to retrieve needed information.

🧊 The Model Is "Frozen Math"

To simplify: a model is a function that converts input (request + context) into output (response). And that's it. There are no internal dynamics that change between calls. (Differences between answers come from random sampling during generation; with sampling turned off, the same prompt yields essentially the same answer.)

It's like a calculator: you enter 2 + 2 and get 4. If you want to get 4 again, you need to enter 2 + 2 again.

Everything related to "memory," "personal history," "recall," are architectural add-ons. For example, agents with "memory" work like this:

- The entire dialogue is stored externally (in a database, file, or vector system).

- For each new request, the agent retrieves relevant fragments from "memory."

- It adds them to the context and only then passes everything to the model.

The model itself doesn't even "know" this text is from memory—for it, it's just another part of the input.
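The three steps of such an agent can be sketched as follows. This is a minimal illustration under stated assumptions: the "memory" is a plain Python list, relevance is measured by simple word overlap instead of vector search, and all function names are hypothetical.

```python
import re

memory = []  # external store; a real system might use a database or a vector index

def words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def remember(text):
    """Step 1: store a piece of the dialogue outside the model."""
    memory.append(text)

def recall(query, k=2):
    """Step 2: retrieve the stored fragments that share the most words with the query."""
    query_words = words(query)
    ranked = sorted(memory, key=lambda item: len(query_words & words(item)), reverse=True)
    return [item for item in ranked[:k] if query_words & words(item)]

def build_prompt(query):
    """Step 3: add the fragments to the context that will be passed to the model."""
    notes = "\n".join(f"- {fragment}" for fragment in recall(query))
    return f"Relevant notes:\n{notes}\n\nUser: {query}"

remember("The user's favorite language is Python.")
remember("The user lives in Minsk.")
remember("The user's cat is called Pixel.")

prompt = build_prompt("Which language should I use for my script?")
print(prompt)
```

From the model's point of view, the resulting prompt is ordinary text. Nothing in it marks the note about Python as coming from "memory."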

🙅‍♂️ Can a Model Learn During a Conversation?

No. A typical AI model (including GPT, Claude, LLaMA) doesn't change itself during operation. For it to "learn" something, retraining or fine-tuning must occur, and that's a separate process that doesn't happen during a chat.

Even if the model answers incorrectly 100 times, it will continue to do the same until you create a new model or change the context.

📏 Context Isn't Infinite: Why a Model Can't "Read Everything"

One of the most common user misconceptions about AI is the illusion that a model can work with "the whole book," "the whole database," or "a lot of documents at once." But that's not true. Models have a strict limit on the context size they can process at one time.

What Is Context in a Technical Sense?

Context is the entire set of information you provide to the model in one call: your request, instructions, documents, dialogue history, etc. Technically, it's not just "text" but a sequence of tokens, the units into which text is split for processing.

Example:

The word "cat" is 1 token. The word "automobile" might be split into 2-3 tokens, depending on the tokenizer. The English sentence "The quick brown fox jumps over the lazy dog." is about 10 tokens (nine words plus the period).
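To make the idea of "breaking text into units" concrete, here is a toy splitter that treats every word and every punctuation mark as one piece. It is an assumption-laden simplification: real models use learned subword tokenizers, which may split long or rare words into several tokens, so actual counts can differ.

```python
import re

def toy_tokenize(text):
    """Split text into words and single punctuation marks (not a real LLM tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", text)

tokens = toy_tokenize("The quick brown fox jumps over the lazy dog.")
print(tokens)
print(len(tokens))
```

This toy splitter counts the final period as its own piece, which is why the sentence comes out as 10 pieces rather than 9 words.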

How Many Tokens Can Modern Models "Hold"?

- GPT-3.5 - 4,096 tokens (16,385 in later versions)

- GPT-4 - 8,192 to 32,768 tokens

- GPT-4o - up to 128,000 tokens

- Claude 3 - up to 200,000 tokens

- LLaMA 3 - 8,192 tokens (LLaMA 3.1 and later - about 128,000)

128,000 tokens is about 300 pages of text. Seems like a lot? But it quickly runs out when you add, for example, technical documentation or code.

🧨 What Happens If You Provide Too Much?

- The prompt won't fit: the model will either refuse to process it or cut part of it off (usually the beginning), so some data will be lost.

- If you supply overly long texts, important parts may be "pushed" out of visibility.

- Lower accuracy—even if all information fits, the model can "get lost" in volume and miss important parts.
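The "cut off the beginning" behavior can be sketched as a simple trimming routine. This is one possible strategy, not how any particular product works: tokens are approximated as whitespace-separated words, and the function names are hypothetical.

```python
def count_tokens(text):
    # Crude stand-in: one token per whitespace-separated word.
    return len(text.split())

def fit_to_budget(messages, max_tokens):
    """Keep the most recent messages that fit into the budget; drop older ones."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order

messages = [
    "System: answer briefly",
    "User: my order number is 12345",
    "Assistant: thanks, noted",
    "User: where is my order now",
]

print(fit_to_budget(messages, max_tokens=10))
```

With a budget of 10 "tokens," only the last two messages survive, and the order number mentioned earlier is silently lost. That is the kind of data loss described in the list above.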

Why Not Just Make "Infinite Context"?

The problem is that all tokens are processed together—and the more there are, the:

- more memory is required on the GPU (the processor that runs the model's computations),

- more time computation takes,

- worse the attention mechanism works: the model's focus "spreads out," and it struggles to tell what matters.
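A quick calculation shows why memory and time grow so fast. The sketch assumes standard full self-attention, where every token is compared with every other token, so the work grows with the square of the context length. Optimized attention variants reduce this cost, and the function name is our own.

```python
def attention_pairs(n_tokens: int) -> int:
    """Number of token-to-token comparisons in full self-attention."""
    return n_tokens * n_tokens

for n in (4_096, 32_000, 128_000):
    print(f"{n:>7} tokens -> {attention_pairs(n):>14,} token pairs")

ratio = attention_pairs(128_000) / attention_pairs(4_096)
print(f"128,000 tokens need about {ratio:.0f}x more comparisons than 4,096 tokens")
```

The context grows about 31 times between the smallest and largest case, but the number of comparisons grows almost a thousandfold.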

How to Work with Large Data?

If the text doesn't fit into the context, there are solutions:

- 🔍 RAG (Retrieval-Augmented Generation) — selecting the most relevant pieces before each request

- 📚 Vector search — finding text similar in meaning

- 🪓 Splitting — you provide information in parts

- 🧠 Agent with memory — uses an external database to "recall" previous material
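Splitting is the simplest of these techniques to show in code. The sketch below cuts a long text into overlapping chunks so that a sentence falling on a boundary is not lost. Chunk sizes are measured in words for simplicity (real pipelines usually count tokens), and the function name and default values are assumptions.

```python
def split_into_chunks(text, chunk_size=50, overlap=10):
    """Split text into chunks of up to chunk_size words; neighbors share overlap words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

# A stand-in "document" of 120 numbered words.
document = " ".join(f"word{i}" for i in range(120))
chunks = split_into_chunks(document, chunk_size=50, overlap=10)

for index, chunk in enumerate(chunks):
    chunk_words = chunk.split()
    print(index, len(chunk_words), chunk_words[0], "...", chunk_words[-1])
```

Each chunk can then be sent to the model separately, or stored for retrieval so that only the relevant chunks are added to the context of a given request.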

✨ Let's Summarize…

Phew! If you've read this far—you're almost an expert 😎 Let's briefly go over the main points again:

- ✅ An AI model isn't magic; it's math that has learned from data

- ✅ A model's "knowledge" isn't stored the way human memory is; it lives in vectors

- ✅ The model doesn't remember you—all "memory" lives in context

- ✅ There are many different models: by data type, functionality, and size

- ✅ Context is limited—and that's not a bug, but a feature you need to work with

- ✅ "Teaching a model in conversation" is still not quite a reality

So, if it seems like AI "understands" or "remembers," remember that you're actually facing a very smart calculator, not a virtual Jan Bayan with amnesia 🙂